Hey @easbar, attached is the GPX file I’ve used to test GH’s map matching: east.gpx (341.5 KB)

Did you try map-matching the same trajectory with unmodified GraphHopper using the OSM map for South Korea? Is it also slow?

Your suggestion gives a much faster result than my previous attempts with the custom network.

2021-05-24 22:07:11.069 [main] INFO com.graphhopper.GraphHopper - version 3.0|2021-05-17T02:51:00Z (5,17,4,4,5,7)

2021-05-24 22:07:11.072 [main] INFO com.graphhopper.GraphHopper - graph car|RAM_STORE|2D|no_turn_cost|5,17,4,4,5, details:edges:1 539 520(47MB), nodes:1 149 086(14MB), name:(4MB), geo:6 843 009(27MB), bounds:121.38442295903913,135.42200666864233,33.114980528838494,43.11188034218282

/Users/east.12/mapmatch/east.gpx

matches: 285, gps entries:5755

gpx length: 105749.83 vs 105574.625

export results to:/Users/east.12/mapmatch/east.gpx.res.gpx

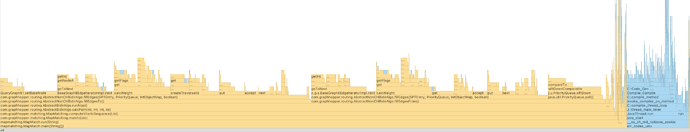

gps import took:0.12338149s, match took: 19.903625
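To put the summary numbers above in perspective, here is a small sketch that derives the length difference and the matching throughput from them. The units are my assumption (meters for the lengths, seconds for the match time, since the log does not print a unit there), and I am also assuming the first "gpx length" figure is the raw track and the second is the matched route.

```python
# Values copied from the log output above.
# Assumptions: lengths are in meters, the match time is in seconds,
# the first length is the raw GPX track and the second is the matched route.
gpx_length_m = 105749.83
matched_length_m = 105574.625
gps_entries = 5755
match_seconds = 19.903625

# Absolute and relative difference between the two lengths.
length_diff_m = gpx_length_m - matched_length_m
length_diff_pct = 100 * length_diff_m / gpx_length_m

# How many GPS entries were processed per second of matching.
entries_per_second = gps_entries / match_seconds

print(f"length difference: {length_diff_m:.1f} m ({length_diff_pct:.2f} %)")
print(f"throughput: {entries_per_second:.0f} GPS entries/s")
```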

However, as this reddit post complains, Korea’s OSM data is not as good as the data from Korean domestic IT giants like Kakao and Naver in terms of detail.

// when importing south-korea-latest.osm.pbf

graph car|RAM_STORE|2D|no_turn_cost|5,17,4,4,5, details:edges:1 539 520(47MB), nodes:1 149 086(14MB), name:(4MB), geo:6 843 009(27MB), bounds:121.38442295903913,135.42200666864233,33.114980528838494,43.11188034218282

// when importing my own data

graph car|RAM_STORE|2D|no_turn_cost|5,17,4,4,5, details:edges:3 226 342(99MB), nodes:2 622 258(31MB), name:(1MB), geo:15 392 663(59MB), bounds:124.61939040269314,131.86962521632003,33.17599549541317,38.58458226076817
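For a rough size comparison of the two graphs, the counts can be pulled out of the two log lines above. This is only a sketch: `parse_graph_stats` is a helper name I made up for illustration, not part of GraphHopper, and it relies on the `key:count(size)` layout seen in these particular log lines.

```python
import re

# The two "graph ..." log lines quoted above, shortened to the part that is parsed.
OSM_LINE = "details:edges:1 539 520(47MB), nodes:1 149 086(14MB), name:(4MB), geo:6 843 009(27MB)"
CUSTOM_LINE = "details:edges:3 226 342(99MB), nodes:2 622 258(31MB), name:(1MB), geo:15 392 663(59MB)"

def parse_graph_stats(line):
    """Hypothetical helper: extract the edges/nodes/geo counts from a log line."""
    stats = {}
    for key in ("edges", "nodes", "geo"):
        # Counts are printed with spaces as thousands separators, e.g. "1 539 520(47MB)".
        match = re.search(rf"{key}:([\d ]+)\(", line)
        if match:
            stats[key] = int(match.group(1).replace(" ", ""))
    return stats

osm = parse_graph_stats(OSM_LINE)
custom = parse_graph_stats(CUSTOM_LINE)

# Ratio of the custom network to the OSM import for each count.
ratios = {key: custom[key] / osm[key] for key in osm}
for key, ratio in ratios.items():
    print(f"{key}: {osm[key]} (OSM) vs {custom[key]} (custom), x{ratio:.2f}")
```

So my own network has roughly twice as many edges and nodes as the OSM import, within a smaller bounding box.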